An essential task that GenAI performs within the peek prototype is labeling a persona with segments from different milieu models, as well as inferring privileges and diversity traits and identifying stereotypical narratives.

But how does it work? And how do I ensure that the result is not completely random with each peek evaluation?

Labelling personas with segments

Let's focus on assigning a segment of a milieu model. Within the prototype, I'm using the following models: Sinus Milieus, Digital Media Types, clåss micromilieus, Die andere Teilung (“The Other Separation”) Social Types, and D21 Digital Types.

I scraped their publicly available segment descriptions as published by the model providers. You can access the information that I'm using within the prototype here for reference.

For example

```python
sinus_segments = [
    {
        "name": "Performer Milieu",
        "profile": {
            "description": "The efficiency- and progress-oriented technocratic elite: global economic thinking; liberal social perspective based on personal freedom and responsibility; self-image as lifestyle and consumption trendsetters; high affinity for all things tech and digital.",
            "share": "0.1"},
        "meta": {
            "x-axis": "Re-orientation",
            "y-axis": "Upper middle-class"}
    },
    {
        "name": "Traditional Milieu",
        "profile": {
            "description": "The security- and order-loving older generation: entrenched in traditional petit-bourgeois and/or working-class culture; undemanding adaptation to necessities; increasing acceptance of the new sustainability norm; self-image as the upstanding and respectable »salt of the earth«.",
            "share": "0.09"},
        "meta": {
            "x-axis": "Tradition",
            "y-axis": "Middle middle-class"}
    },
    # ... more segments
]
```

These segments are then joined in randomized order into a text block and passed to the prompt as context. Using function calling (to generate a structured JSON object), GenAI completes three tasks:

1. assign the segment

2. share a confidence score for the decision

3. explain the decision
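Here is a minimal sketch of how the segment list could be turned into the shuffled `segments_shuffle` text block used in the prompt. The helper `build_segment_context`, the per-segment text layout, and the optional `seed` parameter are assumptions for illustration, not the prototype's actual code.

```python
import random


def build_segment_context(segments, seed=None):
    """Join segment descriptions into one text block in randomized order.

    Hypothetical helper: the "Segment/Description/Share" layout is an assumption.
    """
    rng = random.Random(seed)   # pass a seed only when a reproducible order is needed
    shuffled = list(segments)   # copy, so the caller's list keeps its order
    rng.shuffle(shuffled)
    blocks = [
        f"Segment: {s['name']}\n"
        f"Description: {s['profile']['description']}\n"
        f"Share: {s['profile']['share']}"
        for s in shuffled
    ]
    return "\n\n".join(blocks)


# Two segments with shortened descriptions, for illustration only
sinus_segments = [
    {"name": "Performer Milieu",
     "profile": {"description": "The efficiency- and progress-oriented technocratic elite", "share": "0.1"}},
    {"name": "Traditional Milieu",
     "profile": {"description": "The security- and order-loving older generation", "share": "0.09"}},
]
segments_shuffle = build_segment_context(sinus_segments)
print(segments_shuffle)
```

Reshuffling on every request presumably keeps any single segment order from acting as a constant positional bias across the repeated runs.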

Prompt

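```python
from openai import AsyncOpenAI

client = AsyncOpenAI()  # reads OPENAI_API_KEY from the environment

# Runs inside an async function; segments_shuffle is the shuffled segment
# text block and persona is the persona description
response = await client.chat.completions.create(
    model="gpt-4o-mini",
    temperature=0.9,
    n=2,  # two completions per request
    messages=[
        {'role': 'system', 'content': f"The user will provide you with a description of a persona. Assign this persona to one of the segments described below. Explain your decision. Rate your confidence level on a scale of 1 to 10.\n\n The segments are:\n{segments_shuffle}"},
        {'role': 'user', 'content': persona}
    ],
    tools=[segment_match],   # tool schema defined below
    tool_choice="required",  # forces the model to call a tool
)
```

Tool: segment_match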

```python
segment_match = {
    "type": "function",
    "function": {
        "name": "segment_match",
        "description": "Identify the segment that best fits the persona",
        "parameters": {
            "type": "object",
            "properties": {
                "name": {
                    "type": "string",
                    "description": "Name of the chosen segment"
                },
                "confidence": {
                    "type": "string",
                    "description": "Share a number between 1 and 10 that represents how confident you are with assigning that segment (1 = not confident at all, 10 = absolutely confident)"
                },
                "reasoning": {
                    "type": "string",
                    "description": "Explain how you came to this decision."
                }
            },
            # Required keys must match the property names above
            "required": ["name", "confidence", "reasoning"]
        }
    }
}
```

Input <> Output

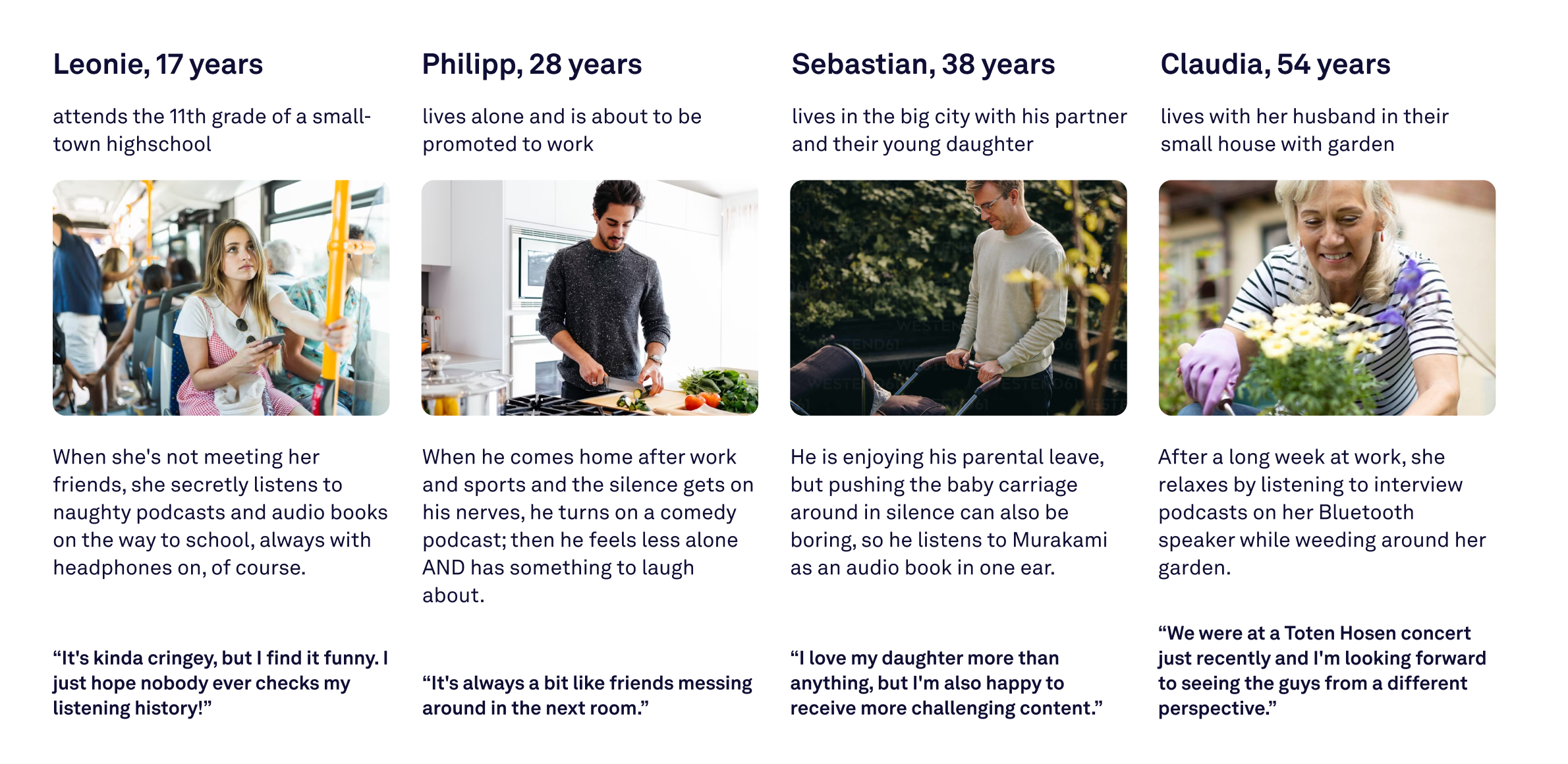

The following is the final result (translated into English) of assigning a Sinus milieu to the persona Leonie, based on the data that GenAI extracted from the persona sheet.

For the final assignment of a label, I have GenAI generate 24 responses in total (12 loops with 2 responses each). From these, I identify the most frequently assigned label and its average confidence score.
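The aggregation step can be sketched as follows, under a few assumptions: tool-call arguments are read from the openai Python SDK (v1.x) response, where `choice.message.tool_calls[i].function.arguments` is a JSON string; the average confidence is taken only over the responses that chose the winning label; and the helper names `parse_segment_calls` and `aggregate_labels` are hypothetical.

```python
import json
from collections import Counter


def parse_segment_calls(response):
    """Collect the segment_match arguments from every choice of one response.

    Assumes the openai>=1.x response shape, where each tool call carries
    its arguments as a JSON string in call.function.arguments.
    """
    results = []
    for choice in response.choices:  # n=2 gives two choices per request
        for call in choice.message.tool_calls or []:
            if call.function.name == "segment_match":
                results.append(json.loads(call.function.arguments))
    return results


def aggregate_labels(results):
    """Return the most frequent segment name and its average confidence.

    Ties go to the label seen first; confidence is assumed to be a plain
    number sent as a string, as in the segment_match schema.
    """
    counts = Counter(r["name"] for r in results)
    top_name, _ = counts.most_common(1)[0]
    # Average only over the responses that picked the winning label
    scores = [float(r["confidence"]) for r in results if r["name"] == top_name]
    return top_name, sum(scores) / len(scores)


# Made-up results standing in for the 24 parsed responses
runs = [
    {"name": "Consumer-Hedonistic Milieu", "confidence": "8", "reasoning": "..."},
    {"name": "Consumer-Hedonistic Milieu", "confidence": "7", "reasoning": "..."},
    {"name": "Traditional Milieu", "confidence": "5", "reasoning": "..."},
]
print(aggregate_labels(runs))  # ('Consumer-Hedonistic Milieu', 7.5)
```

In a full run, the lists returned by `parse_segment_calls` for the 12 requests would be concatenated before calling `aggregate_labels`.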

Input: Example Persona Sheet

Output: After assigning labels

```python
persona_ensemble = [{
    'meta': {
        'name': 'Leonie',
        'age': '17'},
    'persona': {
        'bio_short': 'attends the 11th grade of a small-town high school',
        'bio_long': "When she's not meeting her friends, she secretly listens to naughty podcasts and audio books on the way to school, always with headphones on, of course.",
        'quote': "It's kinda cringey, but I find it funny. I just hope nobody ever checks my listening history!",
        'img_alt': 'A young woman with long, curly blonde hair sitting on a bus, looking at her smartphone.'},
    'segment': [{
        'label': 'sinus',
        'title': 'Sinus Milieus',
        'name': 'Consumer-Hedonistic Milieu',
        'avg_confidence': 75.41666666666667,
        'reasoning': "Leonie is 17 years old and attends the 11th grade, which suggests that she is in a phase of life that is strongly characterized by social interactions and everyday fun. Her interest in 'naughty podcasts' and secretly listening to them shows a focus on entertainment and fun in the here and now, which is typical of the consumer-hedonistic milieu. Their self-image as someone who is a little ashamed but still enjoys it points to a strong need for recognition and a desire to belong to a certain lifestyle. Therefore, I have come to the conclusion that they fit best into the consumer hedonistic milieu."},
        # ... repeated for the other segmentation models
    ]},
    # ... repeated for the other personas
]
```

Observation

Whether or not I show the reasoning and confidence score to the user (I do), omitting either of them from the generated output significantly decreases the chance that the same segment is assigned in repeated runs of the same process with the same data.

As you can see in the reference Airtable of all segments, the amount of publicly available information and the number of segments that I provide as context vary significantly between the models.

This, of course, also influences the reliability of a label assignment.

Exploring label stickiness

This leads me to the idea of label “stickiness”. By that, I mean how reliably GenAI assigns the same label in repeated runs of the same request.

During initial prototype explorations, I found that stickiness can be measured by two factors:

1. Cohesion: How often the most popular label was assigned to one persona

2. Variance: How many different labels were assigned to one persona

A third factor would be a comparison against an expert reference of the "right" label, but this is not feasible when GenAI analyzes unknown documents. The 24 loops of assigning a label were conceptualized to approximate that kind of experience.
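Both factors can be computed from the labels collected for each persona. The sketch below is one possible reading of the definitions above: cohesion is the share of runs that produced the most frequent label, and "variance" is the count of distinct labels (not statistical variance). The function names and the sample data are hypothetical.

```python
from collections import Counter


def cohesion_and_variance(labels):
    """Compute both stickiness factors for one persona.

    cohesion: share of runs that produced the most frequent label
    variance: number of distinct labels (a count, not statistical variance)
    """
    counts = Counter(labels)
    top_count = counts.most_common(1)[0][1]
    return top_count / len(labels), len(counts)


def averaged_factors(labels_per_persona):
    """Average cohesion and variance over all personas."""
    pairs = [cohesion_and_variance(labels) for labels in labels_per_persona]
    avg_cohesion = sum(c for c, _ in pairs) / len(pairs)
    avg_variance = sum(v for _, v in pairs) / len(pairs)
    return avg_cohesion, avg_variance


# Hypothetical labels from 60 repeated assignments for one persona
labels = ["Consumer-Hedonistic Milieu"] * 57 + ["Traditional Milieu"] * 3
print(cohesion_and_variance(labels))  # (0.95, 2)
```

The two averages returned by `averaged_factors` are the inputs that the stickiness formula combines.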

During development, I wanted to measure label stickiness on a scale so that I could gradually optimize prompts and context inputs. This resulted in a simple stickiness formula:

```
stickiness = avg cohesion * (1 - avg variance / # segment options)
```

For the experiment, I had the model assign labels to each persona 60 times (60 × 4 personas = 240 final assignments; with each assignment repeated 24 times as a background task, that makes 5,760 label assignments), resulting in the following scores:

- Sinus: 82 (96.3% cohesion, ø1.5 segments, 10 options)

- Digital Media Types: 80 (92.5% cohesion, ø1.25 segments, 9 options)

- Die andere Teilung: 73 (96.7% cohesion, ø1.5 segments, 6 options)

- clåss micromilieus: 86 (92.5% cohesion, ø2 segments, 28 options)

- D21: 82 (94.6% cohesion, ø1.75 segments, 6 options)
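As a worked check, here is a small sketch that applies the formula to the rounded averages listed above. It assumes that the reported scores are `stickiness × 100` rounded to whole numbers and that cohesion is expressed as a fraction. Under that assumption, it reproduces the Sinus, Digital Media Types, Die andere Teilung, and clåss scores. With the rounded D21 figures (94.6%, ø1.75, 6 options), the same formula gives about 67, so that row is left out.

```python
def stickiness(avg_cohesion, avg_variance, n_options):
    """stickiness = avg cohesion * (1 - avg variance / # segment options)"""
    return avg_cohesion * (1 - avg_variance / n_options)


# Rounded averages from the list above: (cohesion, distinct labels, options)
reported = {
    "Sinus": (0.963, 1.5, 10),
    "Digital Media Types": (0.925, 1.25, 9),
    "Die andere Teilung": (0.967, 1.5, 6),
    "clåss micromilieus": (0.925, 2, 28),
}
for model, args in reported.items():
    print(model, round(100 * stickiness(*args)))
# prints one line each: Sinus 82, Digital Media Types 80,
# Die andere Teilung 73, clåss micromilieus 86
```

Dividing the number of distinct labels by the number of options means that two competing labels cost a 28-segment model like clåss far less (2/28 ≈ 7%) than a 6-segment model like Die andere Teilung (2/6 ≈ 33%).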

You can access my QA test results for the analysis in this table.

Side note: Running this analysis with more than 2,500 API requests and more than 5,000 response generations cost about $13.50 on OpenAI Usage Tier 4 with the gpt-4o-mini model. It processed 61M input tokens and generated 9M output tokens.

In comparison

This analysis run used the final prompts and final context data and was recreated for the purpose of this article.

In an earlier run of this experiment, the average stickiness score across milieu models was about 10 points lower, but I was able to increase it through prompt engineering and expanded context. Here are a few of the original stickiness scores (I hadn't implemented all models at the time):

- Digital Media Types: 56 (91.3% cohesion, ø1.5 segments, 9 options)

- Die andere Teilung: 62 (85.0% cohesion, ø1.5 segments, 6 options)

- clåss micromilieus: 77 (86.3% cohesion, ø2.5 segments, 28 options)

Tl;dr

Developing a reliable QA system for GenAI applications depends heavily on what you are trying to achieve. In the case of peek, the prototype focuses on the task of labeling personas with milieu segments.

By assigning labels through iterative runs and calculating a "stickiness score" based on cohesion and variance, the prototype achieved high reliability for repeated label assignments.

With this custom “stickiness score”, it became easier for me to evaluate the quality of prompts and context in a structured analysis setup. It also allowed me to see concrete improvements from different prompt iterations and configurations.

While this approach is time-consuming and token-heavy, the quality improvements make it worthwhile if you're aiming for scaled, unsupervised app usage.

More about the peek project